This is part 5 in a series of articles on using Azure Resource Manager (ARM) and JSON templates to create a full Remote Desktop Services environment in Azure IaaS.

Let’s briefly reiterate what was previously covered on this subject. It all started with a first RDS deployment on Azure IaaS, covered in the article “Full HA RDS 2016 deployment in Azure IaaS in < 30 minutes, Azure Resource Manager FTW!”. Although this first template already creates a highly available RDS environment on Azure, many improvements and features have been added since. In a second article, “RDS on Azure IaaS using ARM & JSON part 2”, I covered adding SSL certificate management, the creation of Session Collections, and configuring session time-out settings. To help visualize what is going on during the automated creation of the environment, I wrote a third article in which I published two short videos that were also shown at Microsoft Ignite 2016.

Up until now the automated RDS deployment was based on the default Windows Server 2016 TP5 image in Azure for the RD Session Host role. Last week, after Windows Server 2016 became GA, I updated the deployment to support that version. For demo or PoC environments the default image is ideal. In most scenarios, however, your finance department, for example, would not be using Calculator as its primary published application. In a fourth article I covered adding a Custom Template Image for the RD Session Host servers to be able to publish custom applications. That article also briefly touched on RD Licensing configuration and basic RD Web Access customizations.

So, back to part 5 of the series. We’ll take everything covered in the previous articles as a starting point and build on top of that. What features are we adding in this version?

- Configurable Active Directory OU locations

- Securing our Custom scripts in Azure blob

- Load Balancing the RD Connection Broker role

- Restricting access to only allow specific groups

Active Directory OU location

In previous versions of the ARM template, all servers containing RDS roles created by the JSON template ended up in the default Organizational Unit (OU) in Active Directory. While it’s relatively easy to move these computer objects to a designated OU structure after the domain join process, it’s good practice to create computer objects in the designated OU directly during the domain join. Not only does this save time, it also avoids a risk: in scenarios where the default OU has been changed, unwanted Group Policy Objects could be applied to these new servers. Let’s take the following OU structure as an example.

To accommodate the creation of the RD servers inside a custom OU structure, I have added the following parameters to the environment.
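As an illustration, OU parameters of this kind could be declared as in the fragment below. This is only a sketch: the parameter names, the per-role split, and the `contoso.com` distinguished names are hypothetical and are not taken from the actual template.

```json
{
  "parameters": {
    "ouPathConnectionBroker": {
      "type": "string",
      "defaultValue": "OU=Connection Brokers,OU=RDS,DC=contoso,DC=com",
      "metadata": {
        "description": "Hypothetical name and value: OU in which the RD Connection Broker computer objects are created."
      }
    },
    "ouPathWebAccessGateway": {
      "type": "string",
      "defaultValue": "OU=Web Access and Gateway,OU=RDS,DC=contoso,DC=com",
      "metadata": {
        "description": "Hypothetical name and value: OU for the RD Web Access and RD Gateway servers."
      }
    },
    "ouPathSessionHost": {
      "type": "string",
      "defaultValue": "OU=Session Hosts,OU=RDS,DC=contoso,DC=com",
      "metadata": {
        "description": "Hypothetical name and value: OU for the RD Session Host servers."
      }
    }
  }
}
```

Each value is a regular LDAP distinguished name, so the OU has to exist in Active Directory before the deployment runs.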

As part of this change I decided to also move away from using Desired State Configuration (DSC) for the domain join process. Instead of DSC I moved to using an extension directly in ARM. A good example of this extension is available as part of Microsoft’s Azure Quickstart Templates: 201-vm-domain-join.

Below you can see the extension in action. The type needs to be configured as “JsonADDomainExtension”. And as part of the settings, the OUPath can be provided.
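For reference, a domain join extension resource modeled on the 201-vm-domain-join quickstart could look roughly like this. Treat it as a sketch under assumptions: the parameter names (`vmName`, `domainToJoin`, `ouPath`, `domainUsername`, `domainPassword`), the API version, and the handler version are illustrative and may differ from the template used in this series.

```json
{
  "comments": "Sketch only. Parameter names, apiVersion and typeHandlerVersion are assumptions.",
  "apiVersion": "2015-06-15",
  "type": "Microsoft.Compute/virtualMachines/extensions",
  "name": "[concat(parameters('vmName'), '/joindomain')]",
  "location": "[resourceGroup().location]",
  "dependsOn": [
    "[concat('Microsoft.Compute/virtualMachines/', parameters('vmName'))]"
  ],
  "properties": {
    "publisher": "Microsoft.Compute",
    "type": "JsonADDomainExtension",
    "typeHandlerVersion": "1.3",
    "autoUpgradeMinorVersion": true,
    "settings": {
      "Name": "[parameters('domainToJoin')]",
      "OUPath": "[parameters('ouPath')]",
      "User": "[concat(parameters('domainToJoin'), '\\', parameters('domainUsername'))]",
      "Restart": "true",
      "Options": 3
    },
    "protectedSettings": {
      "Password": "[parameters('domainPassword')]"
    }
  }
}
```

The `Options` value of 3 combines the flags for joining the domain and creating the computer account, which is the default used in the quickstart. The password goes in `protectedSettings` so that it is encrypted rather than passed in clear text.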

As a result, the server objects are created in the designated OUs as part of the domain join process.

Securing the Custom scripts

As discussed in previous blog posts, I use the CustomScriptExtension in ARM to launch a PowerShell script that performs the actual deployment of the RDS roles and further configuration. The idea behind the CustomScriptExtension is that you provide a download location from which the VM can download the script and execute it. There are various options for storing these files. In some cases you might want to share your scripts with others, and then GitHub is ideal. This is also where Microsoft publishes many examples as part of the Azure Quickstart Templates. In other cases you might not want to store the scripts publicly on GitHub. You might have developed a custom script that you only want to use inside your organization. In those cases, it’s very convenient to place those files in a container in Azure, in the same subscription where you also deploy the RDS environment. As I’ll discuss later in this chapter, you can also use this method to secure your custom scripts and prevent others from downloading them. Let’s take a look at how to configure this.

First of all, you need a location (storage account) in the Azure Subscription you want to use. Inside this storage account we create a new container.

We can now use this container to upload our custom scripts. Although you can do this using the Azure Portal or using PowerShell, I prefer to use the Microsoft Azure Storage Explorer. This is a small tool you can download and install on macOS, Linux, and Windows. Get the download here: http://storageexplorer.com/

Using the Azure Storage Explorer, you can easily manage the files inside the blob storage.

Inside our ARM deployment we can now refer to this location in Azure as the location from which to download our custom scripts. We provide this URL inside a parameter.

Although the scripts are now stored inside our Azure Subscription, they are still publicly accessible. Anyone who knows the location could browse to the scripts and download them. Again, in some scenarios that might not be an issue if the files don’t contain any sensitive data. But in other scenarios you will want to prevent public access to the scripts.

In order to create a secure location, the access type of the container needs to be changed to Private.

Setting the Access type to Private ensures that there is no anonymous access. Because of this, the data inside the container cannot be accessed without providing the access key of the storage account.

Now that the container is set to private, how do we access the scripts from within our ARM deployment? Using the same CustomScriptExtension as before we provide the location (URL) of the script as part of the fileUris settings. To be allowed to access the script from within our ARM deployment, we provide both the storageaccountname as well as the storageaccountkey. To make sure these are encrypted we place them inside the ProtectedSettings section of the CustomScriptExtension.

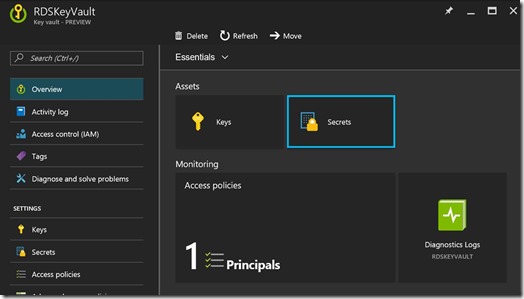
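In template form, a CustomScriptExtension that downloads from a private container could be sketched as follows. The script name `Deploy-RDS.ps1`, the parameter names, and the version numbers are hypothetical placeholders, not the values used in the actual deployment.

```json
{
  "comments": "Sketch only. Script name, parameter names and version numbers are assumptions.",
  "apiVersion": "2015-06-15",
  "type": "Microsoft.Compute/virtualMachines/extensions",
  "name": "[concat(parameters('vmName'), '/deployrds')]",
  "location": "[resourceGroup().location]",
  "dependsOn": [
    "[concat('Microsoft.Compute/virtualMachines/', parameters('vmName'))]"
  ],
  "properties": {
    "publisher": "Microsoft.Compute",
    "type": "CustomScriptExtension",
    "typeHandlerVersion": "1.8",
    "autoUpgradeMinorVersion": true,
    "settings": {
      "fileUris": [
        "[concat(parameters('scriptLocation'), '/Deploy-RDS.ps1')]"
      ],
      "commandToExecute": "powershell.exe -ExecutionPolicy Unrestricted -File Deploy-RDS.ps1"
    },
    "protectedSettings": {
      "storageAccountName": "[parameters('storageAccountName')]",
      "storageAccountKey": "[parameters('storageAccountKey')]"
    }
  }
}
```

If the command line itself carries secrets such as passwords, `commandToExecute` can be moved into `protectedSettings` as well.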

And to make things fully secure, we obviously don’t store the storageaccountkey in plain text in our scripts, but rather store it as a secret in Azure Key Vault.

Using a Key Vault reference, we can safely access the storageaccountkey in our ARM deployment.
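A Key Vault reference lives in the parameters file rather than in the template itself. The sketch below assumes a template parameter named `storageAccountKey` and a secret with the same name. Both names are hypothetical, and the bracketed parts of the vault ID are placeholders to fill in.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "storageAccountKey": {
      "reference": {
        "keyVault": {
          "id": "/subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.KeyVault/vaults/<vault-name>"
        },
        "secretName": "storageAccountKey"
      }
    }
  }
}
```

For this to resolve, the matching template parameter should be declared as `securestring`, and the vault needs to be enabled for template deployment.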

Using this method, we have now created a secure location to store scripts and other files that can be leveraged by our ARM deployment.

Load balancing initial connection RD Connection Broker

In previous versions of the ARM deployment I set up a load balancer to provide high availability and load balancing for the RD Web Access and RD Gateway roles. This load balancer, also created by ARM, has a public IP address so the environment can be accessed from the outside. But what about load balancing the RD Connection Broker role? As you might know, since Windows Server 2012 the RD Connection Broker always handles the initial connection of any user connecting to the environment. Although this process is transparent to the end user, any incoming session first connects to the RD Connection Broker via RDP (TCP 3389). The RD Connection Broker then redirects the session, resulting in another connection to the final destination, one of the RD Session Host servers. This process is explained in more detail here. So in a scenario where multiple RD Connection Brokers are configured for high availability, we ideally also load balance this initial connection. Although this can be done using DNS Round Robin, DNS RR is not aware of RD Connection Broker servers that are unreachable, and in most scenarios the workload will not be divided equally. Instead of DNS RR, let’s leverage the same type of Azure load balancer we used for the RD Web Access and RD Gateway roles, this time load balancing an internal connection.

Since I didn’t cover the load balancer in much detail in previous articles, let’s take a closer look at how this is configured. Inside our ARM deployment we create a new Load Balancer resource as specified below. We place it in the same subnet as the RDS environment and provide a static internal IP Address.
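A minimal internal load balancer resource along these lines might look like the fragment below. The resource names (`rdcb-lb`, `rdcb-frontend`, `rdcb-backend`), the `subnetId` variable, and the API version are assumptions made for illustration. The static address 10.1.9.20 is the one used later in this article.

```json
{
  "comments": "Sketch only. Resource names, the subnetId variable and apiVersion are assumptions.",
  "apiVersion": "2016-03-30",
  "type": "Microsoft.Network/loadBalancers",
  "name": "rdcb-lb",
  "location": "[resourceGroup().location]",
  "properties": {
    "frontendIPConfigurations": [
      {
        "name": "rdcb-frontend",
        "properties": {
          "privateIPAllocationMethod": "Static",
          "privateIPAddress": "10.1.9.20",
          "subnet": {
            "id": "[variables('subnetId')]"
          }
        }
      }
    ],
    "backendAddressPools": [
      {
        "name": "rdcb-backend"
      }
    ]
  }
}
```

What makes the load balancer internal is that the frontend configuration references a subnet and a private IP address instead of a public IP address resource.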

Inside the RD Connection Broker resources in the ARM template, we specify the loadBalancerBackendAddressPools property, referring back to the pool we specified above. This makes sure both RD Connection Broker servers become members of the pool, and thus become the servers that are load balanced.

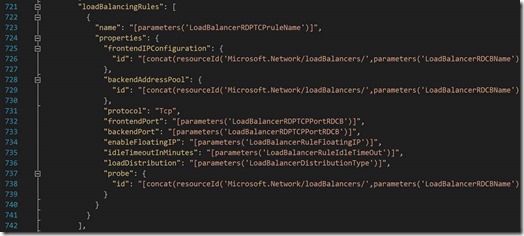
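In an ARM template this property sits on the IP configuration of a network interface. The fragment below is a sketch for one RD Connection Broker NIC, reusing hypothetical names (`rdcb-lb`, `rdcb-backend`, `rdcb-01-nic`, `subnetId`) that are not taken from the actual template.

```json
{
  "comments": "Sketch only. NIC, load balancer and pool names are assumptions.",
  "apiVersion": "2016-03-30",
  "type": "Microsoft.Network/networkInterfaces",
  "name": "rdcb-01-nic",
  "location": "[resourceGroup().location]",
  "dependsOn": [
    "[resourceId('Microsoft.Network/loadBalancers', 'rdcb-lb')]"
  ],
  "properties": {
    "ipConfigurations": [
      {
        "name": "ipconfig1",
        "properties": {
          "privateIPAllocationMethod": "Dynamic",
          "subnet": {
            "id": "[variables('subnetId')]"
          },
          "loadBalancerBackendAddressPools": [
            {
              "id": "[concat(resourceId('Microsoft.Network/loadBalancers', 'rdcb-lb'), '/backendAddressPools/rdcb-backend')]"
            }
          ]
        }
      }
    ]
  }
}
```

The `dependsOn` entry makes sure the load balancer and its pool exist before the NIC tries to join it.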

Next, we define the load balancing rules. For this scenario we want to load balance TCP port 3389, and we set additional parameters for settings like FloatingIP, idle time-out, etc.
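Such a rule could be sketched as below, as it would appear inside the `properties` of the load balancer resource. The names are hypothetical, and the floating IP, idle time-out, and distribution values shown are assumptions rather than the settings of the actual deployment.

```json
{
  "loadBalancingRules": [
    {
      "name": "rdp-3389",
      "properties": {
        "frontendIPConfiguration": {
          "id": "[concat(resourceId('Microsoft.Network/loadBalancers', 'rdcb-lb'), '/frontendIPConfigurations/rdcb-frontend')]"
        },
        "backendAddressPool": {
          "id": "[concat(resourceId('Microsoft.Network/loadBalancers', 'rdcb-lb'), '/backendAddressPools/rdcb-backend')]"
        },
        "probe": {
          "id": "[concat(resourceId('Microsoft.Network/loadBalancers', 'rdcb-lb'), '/probes/rdp-probe')]"
        },
        "protocol": "Tcp",
        "frontendPort": 3389,
        "backendPort": 3389,
        "enableFloatingIP": false,
        "idleTimeoutInMinutes": 4,
        "loadDistribution": "Default"
      }
    }
  ]
}
```

The rule ties together the frontend configuration, the backend pool, and a health probe by resource ID, so all three have to be defined on the same load balancer.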

Lastly, we configure the probes. By configuring a probe, we tell the load balancer which ports to probe on the destination servers to determine if one of the servers is down.
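A TCP probe on the RDP port could be sketched like this, again as a fragment of the load balancer’s `properties`. The probe name, interval, and probe count are assumptions chosen for illustration.

```json
{
  "probes": [
    {
      "name": "rdp-probe",
      "properties": {
        "protocol": "Tcp",
        "port": 3389,
        "intervalInSeconds": 5,
        "numberOfProbes": 2
      }
    }
  ]
}
```

With these example values, a server that fails two consecutive probes taken five seconds apart stops receiving new connections until it responds again.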

As an end result, we have a new Load Balancer in Azure.

And the Load Balancer holds a Back End Pool containing our 2 RD Connection Broker servers.

Now that we have an internal load balancer with our 2 RD Connection Broker servers, we need to take one final step to make sure we can start using it. As you can see the RD Connection Broker Load Balancer was configured with the internal IP Address 10.1.9.20. So we now need to make sure that the RDS deployment points to this IP address to start serving incoming connections. The ARM deployment is already configured to create a HA configuration for the RD Connection Broker role.

As also explained in previous articles, this is configured by a PowerShell script inside an ARM CustomScriptExtension. One of the parameters passed to the script is the RD Connection Broker DNS name (RD Connection Broker Client Access Name).

It’s this DNS name that is used by clients connecting to the RDS deployment, so we need to make sure it resolves to the internal IP Address of the load balancer. Since we are using the RD Gateway in this deployment as well, more specifically we need to make sure the RD Gateway role can resolve the DNS name. To accomplish this, we create an A record in DNS matching the Connection Broker Client Access Name and pointing to the IP Address of the load balancer.

And that’s it. Incoming connections through the RD Gateway are now sent to one of the two RD Connection Brokers, load balanced by the Azure Load Balancer.

Restricting access to only allow specific groups

By default, a Session Collection provides access to the Domain Users group in Active Directory. In most cases, however, you want to restrict access to a specific group of users. To accommodate this, I added a new parameter to the ARM deployment.
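A parameter of this kind could be declared as in the sketch below. The name `allowedUserGroup` and the `CONTOSO\RDS-Users` default are hypothetical and only illustrate the shape of the declaration.

```json
{
  "parameters": {
    "allowedUserGroup": {
      "type": "string",
      "defaultValue": "CONTOSO\\RDS-Users",
      "metadata": {
        "description": "Hypothetical name and value: AD group that is granted access to the Session Collection instead of Domain Users."
      }
    }
  }
}
```

The doubled backslash is JSON escaping for a single backslash between the domain and the group name.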

This parameter is added to the Custom Script extension we’ve mentioned several times before. The script adds the AD group provided in the parameter and removes the default Domain Users group. Taking a look at the Server Manager console, we can confirm that the RDS deployment is now only accessible to members of the specified AD group. This is a permission on the Session Collection itself. In an upcoming article in this series, I’ll also cover applying specific groups to access the RD Gateway components.
